Recently, a client I'm working with gave me a GitHub Copilot subscription to use during our work together. GitHub Copilot is a subscription-based service developed by GitHub and OpenAI (the company behind the current tech sensation, ChatGPT) that integrates with popular development environments to help coders with their work. GitHub's documentation calls Copilot "an AI pair programmer that offers autocomplete-style suggestions as you code". You can begin typing code and Copilot will guess how to complete it, or you can describe what you want in natural language and it will generate new code.

Right now, generative AI is the hot new trend all over the world. It seems like you can't find any place online nowadays that hasn't discussed ChatGPT to death. Every sector, from technology to business to sales and marketing, talks about AI and how it can help people in their respective industries. I recently visited a local bookstore (yes, we still have plenty of those here in Japan) and saw at least a dozen newly published books covering ChatGPT and AI. It's a trend that's not going away soon (unlike NFTs, but that's another story).

I've been using ChatGPT for a while, and it's been helpful with a few minor chores, like sharpening some of my writing and providing ideas and inspiration for this blog. I also use it occasionally for software development, like asking it for an example of how to do a specific task. While it sometimes helps me get unstuck, I've found that ChatGPT only works well under particular circumstances, and more often than not, I end up discarding whatever it spits out.

My hands-on experience using GitHub Copilot

Admittedly, I was skeptical that GitHub Copilot would help me in my day-to-day tasks. I didn't think having functionality similar to ChatGPT available directly inside my code editor would boost my productivity in any measurable way. Based on what I've seen online, I'm not alone in this skepticism. Developers and testers frequently ask about the effectiveness of the service, questioning whether it lives up to GitHub's claims.

After a few weeks of hands-on use, my initial feelings about GitHub Copilot have shifted significantly. I've been pleasantly surprised at how much it's helped me in my day-to-day software development and testing work. While I haven't measured its effectiveness in any tangible way, I'm confident that if I were paying for the service, it would already have paid for itself in the time it's saved me.

I've seen lots of tweets, forum posts, and Slack messages from people who were just like me, wondering whether GitHub Copilot is worth the cost or doubting that it works at all. In this article, I want to share how it's helped me make the best use of my time so others can assess it for themselves.

Save time writing boilerplate code

Any time we begin a new project, we have to spend a lot of time setting things up before we can get moving with the actual work. I don't know about other testers and developers, but I want to get to the fun stuff as quickly as possible, and this setup process often gets in the way. Most of the time, it feels like a slog, especially when it's something familiar or unexciting. For instance, I can't tell you how many times I've felt like I was sleepwalking through setting up CI on GitHub Actions for a project or configuring a testing library I've used dozens of times.

One of the benefits of GitHub Copilot is that you can write a couple of comments in your file, and it can spit out an initial version of this boilerplate code, so you don't always have to write everything from scratch. You won't have to dig through old projects or wade through pages of documentation to eventually find something you can copy and paste as a starting point.

As an example, I recently started building an end-to-end test suite using TestCafe. One of the first things I do in this scenario is create a configuration file with the basic settings I typically use in my TestCafe projects. Despite having written a book about TestCafe, I still have to search through its documentation to remember the correct way to use some settings. With GitHub Copilot, I can skip the Google search: I write a comment directly in an empty TestCafe configuration file, and Copilot suggests the settings I want. Here's an example of how it looks:

Side note: You may have noticed in the animated GIF above that GitHub Copilot suggests only one line at a time instead of providing the entire configuration in a single suggestion. This appears to be an issue with the Copilot service itself in some scenarios rather than how it normally works. Hopefully, the team can fix it soon.

While it may seem like there's little benefit in doing this as opposed to searching for what you need, it's actually a good timesaver since it handles everything right where you need it, without any distractions. I don't know about you, but I get easily distracted whenever I switch away from my code editor to do something else. With Copilot, I've wasted less time looking at things unrelated to the task at hand, and that has added up to a big productivity gain in a short period.

You don't need to keep all the minute details in your head

No matter how many years of experience you have in development or testing, you won't have every single detail of the tools you use in your head, ready to fly off your fingertips. Even if you use the same libraries or frameworks for years, you'll still have to resort to quick online searches to refresh your memory. Someone once told me that one of the signs of a good developer is how effectively they can find solutions on Google. After 19 years of doing this for a living, I can say there's an element of truth to that statement.

Even after using specific tools or libraries constantly on the job for years, most developers just need a good refresher to get moving. For me, the one thing I can never seem to get right is regular expressions. If you ask me to write any regex, I'll likely have to double-check that I'm doing it right, and there's a good chance I won't get it right the first time. Whenever I need to check a regular expression, I end up on regex101. However, I've found myself using that site far less since I started using GitHub Copilot, because it gives me what I need to get things working.

Here's a recent example from another TestCafe test suite. I wanted to create a selector to locate a dynamic element whose ID contains a randomized string. Copilot can take care of the regular expression in the selector for me. I took it even further by using the generated selector to run a few assertions as well.
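Below is a minimal sketch of the regex half of that idea, written so it runs in plain Node. The ID format (a hypothetical "user-card-" prefix followed by a random alphanumeric suffix) is an assumption made for illustration. A comment shows how such a pattern could plug into TestCafe's Selector().withAttribute(), which accepts a RegExp for the attribute value.

```javascript
// Assumption: the dynamic element's ID looks like "user-card-" followed by a
// random alphanumeric suffix, e.g. "user-card-8f3k2q". The prefix is hypothetical.
// ^ and $ anchor the whole ID so partial matches don't slip through.
const DYNAMIC_ID = /^user-card-[a-z0-9]+$/i;

// Small helper to sanity-check the pattern before wiring it into a selector.
function matchesDynamicId(id) {
  return DYNAMIC_ID.test(id);
}

console.log(matchesDynamicId("user-card-8f3k2q"));  // true
console.log(matchesDynamicId("user-card-"));        // false (empty suffix)
console.log(matchesDynamicId("admin-card-8f3k2q")); // false (wrong prefix)

// In a TestCafe test file, the same pattern could drive a selector and assertions:
//   import { Selector } from "testcafe";
//   const userCard = Selector("div").withAttribute("id", DYNAMIC_ID);
//   test("user card renders", async (t) => {
//     await t.expect(userCard.exists).ok();
//     await t.expect(userCard.visible).ok();
//   });
```

Checking a generated pattern against a few sample IDs like this is a quick way to verify Copilot's regex without leaving the editor.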

Just like using Copilot to cover a project's initial boilerplate, these little snippets save me a lot of time by providing suitable prompts to refresh my memory. Doing a Google search for the correct regex or looking up some syntax takes a minute or less. Still, it requires a bit of context switching: you leave your code editor and potentially fall into the distraction trap, as tends to happen with me. Gradually, the time you save with these quick reminders adds up.

Autocomplete with context beyond your project

I'm lazy, so one of the features I use most in my code editor is autocompletion. Any decent IDE these days has built-in autocomplete functionality to help you write code faster. Most code editors also have a thriving ecosystem of plugins and add-ons that extend this functionality to fit your existing projects, so you'll likely find something that works well enough if the built-in features fall short.

Traditional autocomplete functionality scans and analyzes your existing codebase to provide relevant suggestions. GitHub Copilot goes a step further. Besides the context of your own code, it draws on a model trained on a vast amount of publicly available code, including projects similar to yours. This extra context often yields additional suggestions that will help you create better automated tests.

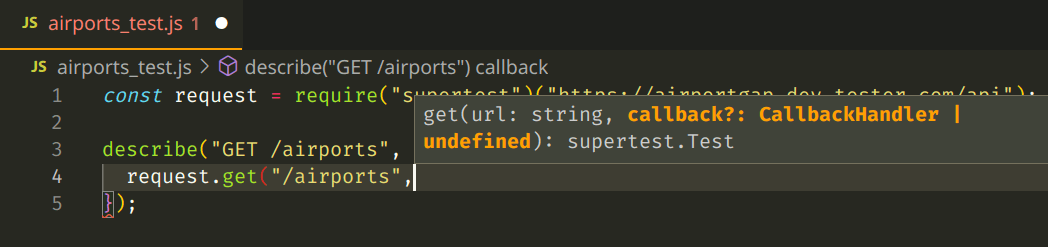

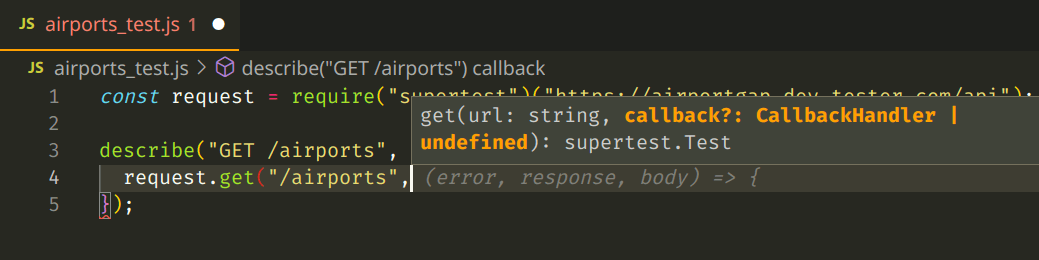

For instance, let's say I'm writing API tests using JavaScript libraries. With VS Code's built-in autocomplete functionality, I can see which parameters I need to call my library's functions and assertions. In this example, I'm using the SuperTest library for my testing, and VS Code uses the library's type definitions to show me how to use the get function and what parameters it accepts.

Depending on the library, this inline documentation may be all you need to complete your function call. But in this test, I want to pass a callback to the get function. The information provided by VS Code says the callback can be either of type CallbackHandler or undefined. However, it doesn't say what CallbackHandler is. I'm guessing it's a function, like a typical handler in JavaScript, but I need to know what parameters it expects, so I'm left going back to the documentation to figure out what I need.

With GitHub Copilot, I'll get the same documentation and autocomplete functionality as shown above, but it will also provide me with a real example of how to use it:

Although this is a subtle change and doesn't show me what I can do with the callback function, it shows me the parameters it typically expects, which is more than enough to get me moving. This functionality is particularly beneficial when I'm unfamiliar with an existing test automation framework, since it gives me actual code that I can verify quickly instead of relying on trial and error. This form of autocomplete is a huge productivity boost, helping me create robust tests in a fraction of the time.
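For context, the superagent type definitions that SuperTest builds on declare CallbackHandler roughly as (err: any, res: Response) => void. That is a Node-style, error-first callback. The sketch below demonstrates that shape without needing a server. It uses fakeGet, a hypothetical stand-in for request(app).get(url, callback) that makes no HTTP request and simply replies asynchronously with a canned response.

```javascript
// Hypothetical stand-in for request(app).get(url, callback). It performs no
// HTTP request; it answers on the next event-loop turn with a canned response
// so the (err, res) callback shape can be shown in isolation.
function fakeGet(url, callback) {
  setImmediate(() => {
    if (url === "/users") {
      callback(null, { status: 200, body: [{ id: 1, name: "Ada" }] });
    } else {
      callback(new Error(`Unexpected URL: ${url}`), undefined);
    }
  });
}

// A CallbackHandler-style function: check the error first, then use the response.
function handleUsers(err, res) {
  if (err) {
    console.error("Request failed:", err.message);
    return;
  }
  console.log(res.status, res.body.length);
}

fakeGet("/users", handleUsers); // prints "200 1" once the callback fires

// With SuperTest itself, the same (err, res) shape is commonly passed to .end():
//   request(app)
//     .get("/users")
//     .expect(200)
//     .end((err, res) => {
//       if (err) throw err;
//       // inspect res.body here
//     });
```

Knowing that the handler receives an error first and the response second is exactly the piece of information the bare CallbackHandler type name leaves out.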

Will AI take my job as a developer or automation tester?

Ever since ChatGPT and tools like GitHub Copilot became mainstream, the main question almost every developer or tester asks is whether these tools are good enough or will become good enough to replace them. It's a valid thought to have, especially since we're beginning to see reports of organizations that are letting workers go because of these tools.

As for software development and test automation, however, tools like ChatGPT and GitHub Copilot won't entirely replace developers or testers, at least not if organizations want well-written and effective code and tests. The suggestions that Copilot and similar tools provide still have plenty of room for improvement. Despite finding value in the service, I've seen it offer ineffective or outright invalid code. You may have spotted in the examples I provided above that Copilot's suggestions work but could be written more efficiently. Sometimes it gives code that looks like it should work but has subtle bugs that even experienced coders may not spot right away.
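To make "subtle bugs" concrete, here is a hypothetical example of JavaScript that looks correct at a glance but misbehaves. It does not come from a real Copilot session. It is a well-known pitfall: a regular expression with the g flag keeps state in lastIndex between test() calls, so a reusable validator gives different answers for the same input.

```javascript
// Hypothetical validator that looks fine but has a subtle bug: the /g flag
// makes the regex stateful. After a successful match, RegExp.prototype.test
// sets lastIndex to the end of the match, so the next call starts searching
// from there instead of from the beginning of the string.
const ID_PATTERN_BUGGY = /^user-card-[a-z0-9]+$/g;
function isUserCardIdBuggy(id) {
  return ID_PATTERN_BUGGY.test(id);
}

// Fix: drop the /g flag. Without it, test() keeps no state between calls.
const ID_PATTERN = /^user-card-[a-z0-9]+$/;
function isUserCardId(id) {
  return ID_PATTERN.test(id);
}

console.log(isUserCardIdBuggy("user-card-abc123")); // true
console.log(isUserCardIdBuggy("user-card-abc123")); // false (search resumed at index 16)
console.log(isUserCardId("user-card-abc123"));      // true
console.log(isUserCardId("user-card-abc123"));      // true
```

A single manual check of the buggy version passes. The failure only appears on a repeated call, which is why this kind of code can survive a quick review.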

Most of these services use existing context from your project, but only if you're explicit about it. AI still needs to catch up on the complex nuances that only humans can grasp and parse well. That might change rapidly as these services learn from all the data they gather from users like me, but the jury's still out on whether that change will happen sooner rather than later.

My main concern about GitHub Copilot

My main concern about GitHub Copilot isn't that it sometimes gives you the wrong thing. My main worry is that less-experienced developers and testers rely on these tools to do their work, blindly using the code Copilot spits out without verifying whether it works or suits their needs. This leads teams to churn out inefficient code that, at best, works now but will inevitably cause trouble down the road.

I've already seen this issue first-hand on a team I'm working with. I reviewed a pull request from a less-experienced developer, and their code had some bugs that I caught rather quickly. This developer left some comments in the affected areas of the codebase. I copied those comments and ran them through GitHub Copilot on a clean branch in my local system. Sure enough, Copilot gave me the same broken code this developer attempted to commit to our repo. The evidence was clear that he used Copilot's suggestions without pausing to consider whether they were suitable for our systems.

If you're a less-experienced developer or automation tester, tools like GitHub Copilot can help you become a better coder by giving solid examples of how to accomplish specific tasks. However, just because you get a response that looks functional doesn't mean that it is. It might work now, but think about how it affects the codebase as a whole. Use these opportunities to learn from what the AI gave you, and make sure you understand what the code does before attempting to ship it.

Summary

Generative AI, particularly ChatGPT, is one of the main topics of discussion around the globe. These tools are becoming increasingly popular, with seemingly dozens of products integrating them in some form every day. The reason behind this sudden popularity is that they provide excellent value for specific needs. Whether you're a developer, a salesperson, or a marketer, you'll likely find a handy way to use these tools to improve your skills and business.

As a developer and tester, the AI-based tool that has impressed me most lately is GitHub Copilot, a subscription-based tool that offers autocomplete-style suggestions based on your project and a vast array of public code repositories. I was skeptical of Copilot at first, but it's been a very helpful tool in my daily work. It's saved me countless hours by handling boilerplate code, refreshing my memory on infrequently used functions, and giving me solid suggestions that improved my code.

GitHub Copilot and other AI-based coding tools won't replace developers or testers any time soon, but there's a real concern about less-experienced coders relying too heavily on them. GitHub Copilot is a valuable tool when used correctly, but as developers and testers, we must stay careful with our coding practices to ensure we don't create complications down the road. I'd recommend giving Copilot a try. You'll likely be as pleasantly surprised as I was.