When we create automated tests for a web application, we usually only verify that things work as the developers intended. We follow the "happy path," filling out forms correctly with inputs like valid emails, capitalized names, and passwords that follow the system's requirements.

Unfortunately, the real world is messy. People will use your application in ways you never imagined. You'll be surprised at the number of ways users can abuse your app, whether it's due to inexperience or maliciousness.

For the most part, it's okay to write only a few tests covering the "unhappy path," especially among end-to-end tests, since they tend to run slower. However, it pays to have a couple of tests that validate input that wouldn't appear under normal conditions but could cause all sorts of issues.

One well-known tool that testers use to check whether an application can handle odd input is the Big List of Naughty Strings. This article covers what the list is, how it helps keep your application secure, and ways to automate these tests.

Why test form inputs on web applications

When you have a public-facing web application that lets people enter data and submit it in a form, developers don't control what comes in. They have to ensure that whatever comes in won't wreak havoc on the system. The practice of cleaning up user input is known as data sanitization.

The issues caused by unsanitized data range from harmless inconveniences, such as a page section displaying incorrect text, to very serious ones, like someone gaining access to your entire application and network. Here are a few common vulnerabilities that user input can cause.

Cross Site Scripting (XSS)

Cross Site Scripting, or XSS, is an attack that injects malicious code onto a trusted website.

In web applications, the malicious code is often in JavaScript, which can get executed on someone else's machine without them realizing it if the app doesn't sanitize its inputs. This code can lead to different attacks like stealing their credentials from their browser cookies or redirecting them to an untrusted site.

On improperly protected sites, the attack works when a malicious user submits input that the page later renders as JavaScript. When another user lands on the page that contains the JavaScript, the browser happily executes the code. Since the code comes from the site itself, the browser treats it as trusted.

To deal with XSS attacks, web applications need to properly sanitize any input coming from an external party before displaying it to other users. The most common way to nullify the attack is to replace certain characters that may trigger code execution in a browser (such as ", <, and >) with their HTML entity equivalents (&quot;, &lt;, and &gt;, respectively). The page renders the same, but the browser won't get tricked into executing the text as code.
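As a rough illustration of the character replacement described above, here is a minimal sketch of an escaping helper. The name escapeHtml and the entity table are assumptions for this example; in practice, the escaping built into your framework or templating engine is usually the safer choice.

```javascript
// Hypothetical helper: replace characters that HTML treats specially
// with their entity equivalents, in a single pass over the input.
const HTML_ENTITIES = {
  "&": "&amp;",
  "<": "&lt;",
  ">": "&gt;",
  '"': "&quot;",
  "'": "&#39;",
};

function escapeHtml(input) {
  return String(input).replace(/[&<>"']/g, (char) => HTML_ENTITIES[char]);
}

console.log(escapeHtml('<script>alert("hi")</script>'));
// prints: &lt;script&gt;alert(&quot;hi&quot;)&lt;/script&gt;
```

The escaped output displays as the original text in the browser, but it no longer forms a tag the browser would execute.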

Cross Site Request Forgery (CSRF)

Cross Site Request Forgery, or CSRF, is a type of attack that tricks a browser into performing actions on behalf of an authenticated user on a website.

Most web apps use cookies to store information about a user's session to keep track of whether the user is allowed to perform requests on the server. Browsers automatically send those cookies with each request to the site. Unfortunately, this also lets a malicious user piggyback on a victim's cookies to perform actions on the victim's behalf if the application does not protect against CSRF attacks.

Let's say you're logged in to a web app for a game under the domain awesomegame.com. You click on a link to a direct message sent by a malicious user. The malicious user included an image tag that points to a URL within the web app that acts on your behalf. For example, the image tag is <img src="https://awesomegame.com/account/delete">. The browser, thinking it's a regular image, sends a request with your cookies to that URL. If the site doesn't protect its users against CSRF attacks, the server performs the request, and your account gets deleted without your consent.

There are a few ways web applications handle these attacks. Some frameworks, such as Ruby on Rails, have CSRF countermeasures set up by default. Most other frameworks that don't have CSRF countermeasures by default have libraries to help against these attacks, like csurf for Express and Node.js.
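Many of these countermeasures rely on a secret, per-session token that a forged request from another site has no way of knowing. The sketch below only illustrates that idea using Node.js's built-in crypto module. It is not how Rails or csurf are implemented, and the function names are hypothetical.

```javascript
const crypto = require("node:crypto");

// Create an unpredictable token to keep in the user's session
// and embed in each form as a hidden field.
function generateCsrfToken() {
  return crypto.randomBytes(32).toString("hex");
}

// Compare the token stored in the session with the one submitted in the form.
// timingSafeEqual throws on buffers of different lengths, so check that first.
function isValidCsrfToken(sessionToken, submittedToken) {
  if (typeof sessionToken !== "string" || typeof submittedToken !== "string") {
    return false;
  }
  const expected = Buffer.from(sessionToken);
  const received = Buffer.from(submittedToken);
  if (expected.length !== received.length) {
    return false;
  }
  return crypto.timingSafeEqual(expected, received);
}

const token = generateCsrfToken();
console.log(isValidCsrfToken(token, token));    // true
console.log(isValidCsrfToken(token, "forged")); // false
```

A request triggered by an image tag on another page carries the cookies but not the token, so a server performing this check would reject it.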

SQL Injection

SQL Injection is an attack where users can manipulate a form into performing database queries for their gain.

In database-driven web apps, users can perform actions from a form that accesses the database in some way. For instance, logging in to a website sends a query to read the database to find the credentials submitted via a form. Usually, these database queries are specific for the action, like SELECT * FROM users WHERE email = '[email protected]' AND password_hash = 'abcd1234';. If the database finds a record, the application authenticates the user. However, a malicious user with a bit of SQL know-how can manipulate this query by submitting specially-crafted inputs.

In the previous example, a user needs to enter their email address and a password to validate their credentials. On an unprotected app, someone can enter something like 'OR 1 --, which the server processes as SELECT * FROM users WHERE email = '' OR 1 -- AND password_hash = 'abcd1234';. On most SQL database servers, everything after two hyphens in a query signifies a comment and isn't processed. That means that when the query checks the OR 1 segment, it returns all records, tricking the application into authenticating the user.
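To make the mechanics concrete, here is a deliberately vulnerable sketch that builds the login query by string concatenation. The function name is hypothetical, and no database is involved; the point is only to show what the SQL text looks like once the crafted input lands in it.

```javascript
// Deliberately vulnerable: user input is concatenated straight into the SQL text.
function buildLoginQueryUnsafe(email, passwordHash) {
  return `SELECT * FROM users WHERE email = '${email}' AND password_hash = '${passwordHash}';`;
}

console.log(buildLoginQueryUnsafe("' OR 1 --", "abcd1234"));
// prints: SELECT * FROM users WHERE email = '' OR 1 --' AND password_hash = 'abcd1234';
```

The quote that originally closed the email value ends up after the two hyphens, so it becomes part of the comment along with the password check.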

The best way to handle SQL injection is to sanitize any input coming from a user before running any database queries. Most web application frameworks nowadays ship with ORMs that escape or parameterize your data before querying the database. Still, developers need to stay alert to what data interacts with the database, especially if the data comes from someone else.
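One common form of this protection is the parameterized query, where the SQL text stays fixed and user input travels separately as data. The sketch below assumes a driver that accepts numbered placeholders with a separate values array, which is the shape of node-postgres query config objects. The helper name is hypothetical, and nothing here talks to a real database.

```javascript
// Hypothetical helper: keep the SQL text fixed and pass user input separately.
function buildLoginQuery(email, passwordHash) {
  return {
    text: "SELECT * FROM users WHERE email = $1 AND password_hash = $2",
    values: [email, passwordHash],
  };
}

const query = buildLoginQuery("' OR 1 --", "abcd1234");
console.log(query.text);   // the SQL text is the same for every input
console.log(query.values); // the crafted input is carried as data, not as SQL
```

Because the crafted string never becomes part of the SQL text, the database compares it against the email column as an ordinary value.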

What's the Big List of Naughty Strings, and how can it help against these attacks?

The Big List of Naughty Strings is, well, a list of text strings. However, it's no ordinary list. It has a unique purpose for testing. The strings compiled in the list are a collection of data that, when processed under certain circumstances, can cause problems in a web app.

The list contains various types of strings that can trigger unwanted behavior in your application. Some strings can get used for simple tests such as checking if a form allows and correctly displays Unicode characters for different languages. Other strings are appropriate for security checks like the ones described above. There are even strings that check platform-specific bugs, like the strings that crashed iOS devices in the past.

There are over 500 different strings on the list, so there's plenty to choose and test on your applications. Just open a copy of the list, copy a line of text, and paste it to any input that you can submit to the server. It's a quick and easy way to find issues that developers and testers rarely think about during routine testing.

Still, what fun is it to copy and paste these random strings by hand? Let's experiment with taking the Big List of Naughty Strings and creating automated tests, so we don't have to do all the copying and pasting by hand.

Automate checking naughty strings

To demonstrate how the Big List of Naughty Strings can help catch issues with form inputs, I created a small web app called the Naughty String Checker. It lets a user input a string, submit the form, and see how the application renders it.

While this web application serves the purposes for the rest of this article, there are a few drawbacks. The Naughty String Checker app does not reflect most real-world applications. It only does one single thing with no database on the backend, and no sessions or cookies to manage.

Another point to note is that I built the app using the Ruby on Rails framework, which has a lot of excellent security measures implemented by default. Out of the box, it handles data sanitization, adds security tokens to thwart CSRF attacks, and much more. To make it work for this example, I'm purposely not sanitizing the input when displaying it on screen.

For our test framework, we'll use TestCafe to run a few examples using the Big List of Naughty Strings. The article assumes you have TestCafe set up and running on your local environment. Check out the previous Dev Tester article on getting started with TestCafe if this is your first time using TestCafe. You can also follow along if you use another test framework and apply the same concepts.

First, we'll create a simple test to ensure the website is working as expected. The Naughty String Checker application has a single text field in which a user can input any string they want. When the user submits the form, it displays a page indicating the string they entered. If we see the exact string as submitted in the input field, it means the string isn't causing any problems.

Let's write a quick TestCafe test to ensure this works as described. We'll create a directory called tests for storing our TestCafe files. Inside our tests directory, we'll create a new file called string_check.js and write our test:

    import { Selector } from "testcafe";

    // Elements on the page, located by their test IDs.
    const stringField = Selector("[data-testid='string-field']");
    const submitButton = Selector("[data-testid='submit-button']");
    const stringResult = Selector("[data-testid='string-result']");

    fixture("Naughty String Checker")
      .page("https://naughtystrings.dev-tester.com");

    test("Show a submitted basic string after submitting the form", async t => {
      // Fill in the text field and submit the form.
      await t
        .typeText(stringField, "This is a basic string")
        .click(submitButton);

      // The result page should show the string exactly as submitted.
      await t
        .expect(stringResult.innerText)
        .contains("This is a basic string");
    });

The test loads the Naughty String Checker application (located at https://naughtystrings.dev-tester.com), inputs "This is a basic string" in the text field on the page, clicks the submit button, and checks on the next page that the string is inside an element with a test ID of string-result. Let's run this test on Chrome with the following command:

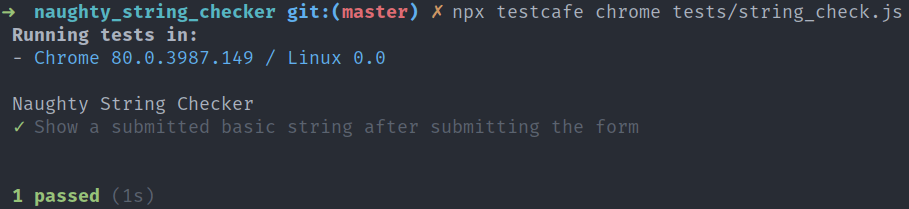

npx testcafe chrome tests/string_check.jsThe test should pass successfully:

Now that we have a test to check the "happy path," let's introduce the Big List of Naughty Strings.

There are a few versions of the Big List of Naughty Strings around the Internet. The strings used in this article come from this GitHub repo, which is one of the first and most popular sources of the list. The repo includes a JSON file containing the strings as an array, which we can easily access in our tests using JavaScript.

We first download the JSON file and place it inside our tests directory under the name blns.json. With the file in the directory, our next step is to read the file and parse the strings contained within so we can use it in our tests.

Since TestCafe runs on Node.js, we can use Node.js modules to read and process the JSON file. We can do this processing before running our tests. After the first import line in the file, we can import the necessary Node.js modules, read the JSON file, and parse it so we can access the array inside our code:

    // Node.js modules.
    import fs from "fs";
    import path from "path";

    // Process the Big List of Naughty Strings.
    const rawData = fs.readFileSync(path.resolve(__dirname, "blns.json"));
    const naughtyStrings = JSON.parse(rawData);

The fs and path modules come from Node.js. fs exposes an API to allow us to interact with the file system, and path introduces utilities to work with files and directories.

Next, using fs.readFileSync, we read the JSON file. In this command, we're also using path.resolve to resolve the path of the JSON file relative to the location of the test file. Without path.resolve, Node.js looks for a file inside the current directory where we run our tests, which might not be where it can find the JSON file.

After reading the file, we store the result in the rawData variable. Without an encoding argument, fs.readFileSync returns the contents in binary form as a Buffer. We can process this raw data using JSON.parse, which turns the file contents into a JavaScript array that we can use in our tests.

Now that we have our list of naughty strings in a usable format for our tests, let's start using some of those strings as part of our test automation. Inside the string_check.js file, let's add one more test:

    test("Check a random naughty string when submitting the form", async t => {
      // Pick one entry from the parsed list at random.
      const randomString = naughtyStrings[Math.floor(Math.random() * naughtyStrings.length)];

      await t
        .typeText(stringField, randomString)
        .click(submitButton);

      await t
        .expect(stringResult.innerText)
        .contains(randomString);
    });

Our new test looks similar to the first test, except that it uses a random string from the Big List of Naughty Strings. We take the array of strings we parsed from the list, grab a random item from it using plain JavaScript, and store it in the randomString variable. We then use this string both as the form input and as the expected value in our assertion.

As before, if we see the random string in the test, the string isn't causing any problems, so there's no alert to raise. However, if we don't see the string, we found an issue on the application. Depending on how well-protected a web application is against different types of vulnerabilities, you might not encounter any errors for these strings.

In the case of the Naughty String Checker app, some strings will cause issues since they're not getting adequately sanitized. If your random string happens to be one of those strings, the test fails. That failure indicates that the application contains a potential security vulnerability that developers need to address.
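One practical wrinkle with a purely random pick: to my knowledge, TestCafe's typeText action expects non-empty text, and the JSON version of the list may include an empty string. If both hold for your versions, the test could fail for reasons unrelated to the application. The sketch below shows one way to skip empty entries. The helper name and the sample array are hypothetical stand-ins for the parsed blns.json data.

```javascript
// Hypothetical helper: pick a random entry, skipping empty strings.
// The random source is injectable so the choice can be controlled in tests.
function pickRandomString(strings, random = Math.random) {
  const candidates = strings.filter((s) => typeof s === "string" && s.length > 0);
  if (candidates.length === 0) {
    throw new Error("No non-empty strings to choose from");
  }
  return candidates[Math.floor(random() * candidates.length)];
}

// Stand-in sample; in the test file this would be the naughtyStrings array.
const sample = ["", "1E2", "<script>alert(1)</script>"];
console.log(pickRandomString(sample, () => 0));    // prints: 1E2
console.log(pickRandomString(sample, () => 0.99)); // prints: <script>alert(1)</script>
```

Inside the TestCafe test, the randomString assignment could then call pickRandomString(naughtyStrings) instead of indexing the array directly.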

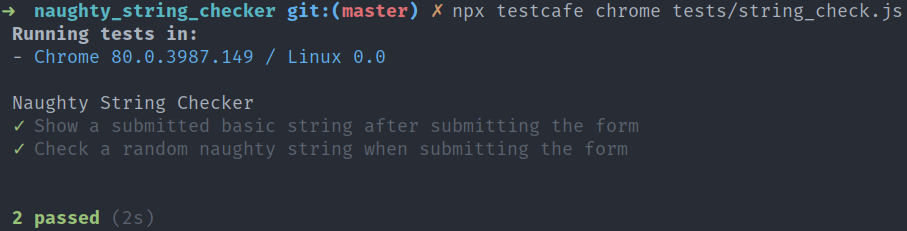

Running the test a few times shows things are going well:

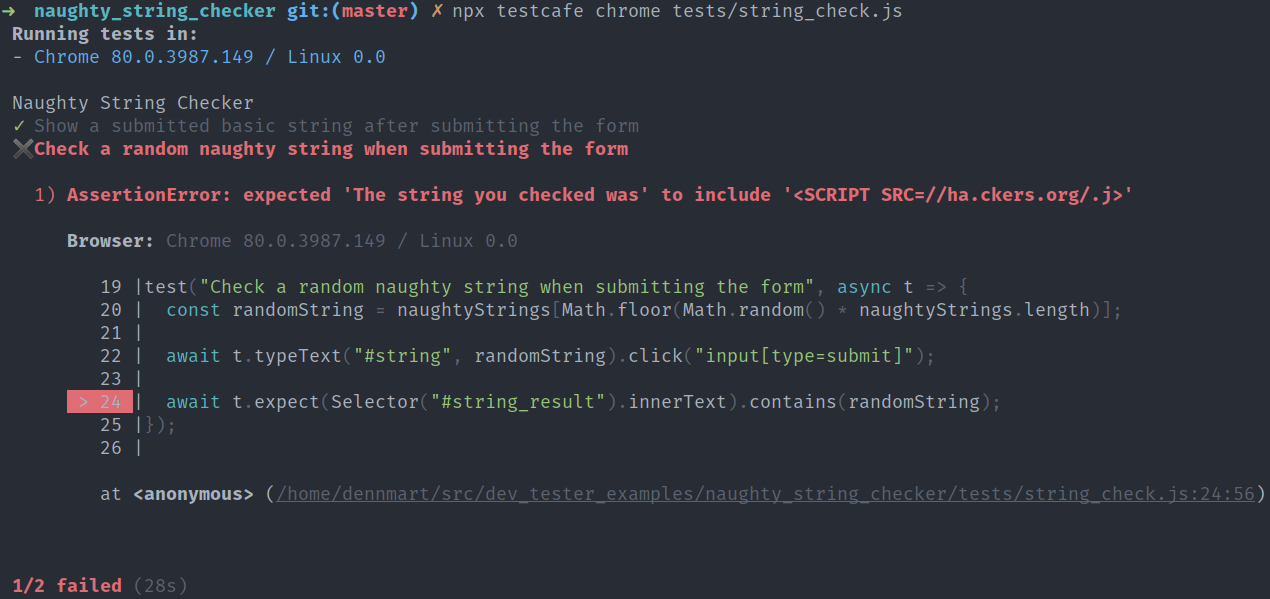

When it runs into a string that causes issues in this application, such as a <script> tag, you'll see which string caused the problem:

Seeing the test failure shows which strings cause problems in the application. In the test run shown above, the string <SCRIPT SRC=//ha.ckers.org/.j> caused a problem because the application didn't sanitize it and the browser executed the script, which is a potential XSS attack.

How to use in the real world

The examples above help to demonstrate how something that is often overlooked, like displaying unsanitized user input, can contain flaws. However, these examples don't reflect the use of real applications. Most modern web applications connect to a database and have lots more interactivity from a user standpoint, and deal with different security concerns. How can you use the concepts shown in this article using the Big List of Naughty Strings in your real-world application?

At the beginning of this article, we mentioned that testers often use this list manually. That's a good start if you're not doing this kind of testing during manual or exploratory testing phases. If you're a tester or developer, you should consider getting into the habit of grabbing a few strings from the list and checking your application's forms.

It's better to handle these checks using automation, as shown in the examples here. When you automate testing your app with these strings, it frees you from the manual steps needed to perform these kinds of tests. Depending on your testing process, you can test a single string or go through multiple strings for variety.

If you decide to go the automation route, I wouldn't recommend running these tests after each change, since it introduces an element of randomness. These randomized tests are a perfect candidate to run less frequently, such as in nightly builds.
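If the randomness is a concern, one option is to drive the pick from a seeded generator and log the seed, so a failing nightly run can be replayed with the same string. The sketch below uses a small mulberry32-style generator. The NAUGHTY_SEED environment variable and the sample array are assumptions made up for this example.

```javascript
// A small seeded pseudo-random generator (mulberry32-style).
// Returns a function producing values in the range [0, 1).
function mulberry32(seed) {
  let state = seed >>> 0;
  return function next() {
    state = (state + 0x6d2b79f5) | 0;
    let t = Math.imul(state ^ (state >>> 15), state | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Reuse a seed from the environment (hypothetical variable name),
// or fall back to the current time and log it for later replay.
const seed = Number(process.env.NAUGHTY_SEED) || Date.now();
console.log(`Naughty string seed: ${seed}`);

const random = mulberry32(seed);
const strings = ["alpha", "beta", "gamma", "delta"]; // stand-in for the real list
console.log(strings[Math.floor(random() * strings.length)]);
```

Running the suite again with the logged seed produces the same sequence of picks, which makes an intermittent failure much easier to investigate.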

Regardless of how you decide to test, you don't need to check each string in the list. Many of the strings behave in the same manner, and some won't apply in your situation. One good tip is to roll a customized version of the Big List of Naughty Strings for your needs, covering strings that apply in your situation.
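A customized list can be as simple as a filtered copy of the original. The sketch below keeps only strings matching the categories a given form cares about. The category names and regular expressions are rough, hypothetical heuristics, not anything defined by the Big List of Naughty Strings itself.

```javascript
// Hypothetical predicates for the kinds of strings a given form cares about.
const categories = {
  markup: (s) => /[<>]/.test(s),
  sqlLike: (s) => /('|--|;)/.test(s),
  nonAscii: (s) => /[^\x00-\x7F]/.test(s),
};

// Keep only the strings matching at least one of the chosen categories.
function buildCustomList(strings, chosen) {
  const predicates = chosen.map((name) => {
    if (!categories[name]) {
      throw new Error(`Unknown category: ${name}`);
    }
    return categories[name];
  });
  return strings.filter((s) => predicates.some((matches) => matches(s)));
}

// Stand-in sample; in practice this would be the parsed blns.json array.
const sample = ["plain text", "<b>bold</b>", "1; DROP TABLE users", "田中さん"];
console.log(buildCustomList(sample, ["markup", "sqlLike"]));
// prints: [ '<b>bold</b>', '1; DROP TABLE users' ]
```

The filtered array could be saved as its own JSON file and loaded in place of blns.json, which keeps the nightly run focused on strings that matter for your application.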

Summary

While testing our web applications, we often follow the "happy path" to ensure the application is working as expected. However, you never know what kinds of input users try to submit to your applications. Someone can send something to your application that causes unintended consequences.

Sometimes it's an honest mistake, and no harm comes out of the situation. But other times, a tiny unpatched segment in your code can cause significant damage to your application, your users, and even your organization. There are plenty of vulnerabilities that malicious users can exploit if your application doesn't handle insecure input.

As testers and developers, we need to ensure our applications are secure and working as expected. Using tools like the Big List of Naughty Strings helps expose unexpected behavior and destructive actions by using text that reveals problems.

You can check some of these strings during manual and exploratory testing. You can also automate these checks to make it easier to spot potential trouble. Finding and squashing these issues before they get to the hands of your users can save tons of headaches for your organization in the future.

Have you or your team used the Big List of Naughty Strings as part of your testing process? What other ways have you used the list to keep your applications running smoothly? I'd love to know - leave a comment below!
