If you spend any amount of time writing end-to-end tests for a web application, the inevitable will happen: your tests will fail when you least expect them to. No matter how much time you spend covering every angle to keep your tests from failing, it will happen sooner or later.

End-to-end tests have a reputation for failing even when nothing has changed since the last time the test suite passed. Since these kinds of tests have many moving parts, any bump in the road during test execution (external dependencies, network connections, database queries, and so on) can litter your all-green test suite with red marks.

Thankfully, we're not left to fend for ourselves when these issues happen. Most modern testing frameworks have multiple ways to check what's going on during a test run. As a tester, you have plenty of tools at your disposal to dig into why a test failed.

TestCafe is no exception. Out of the box, TestCafe contains some excellent methods to figure out what's happening during the execution of your test suite. Here are three easy and useful ways you can debug your TestCafe tests.

1. Take a screenshot or video

Sometimes a test error leaves you scratching your head because the state of the application at the time of the error is nothing like what you expected. For example, a common issue is expecting an element to be on the page, only for the test to fail because it's not there.

An excellent way to see the current state of a given page is by taking a screenshot. TestCafe supports taking a screenshot at any point during a test run. All you need to do is call the takeScreenshot action wherever you want to capture the current state of the application under test.

In the following example, we take a screenshot after the test fills in and submits the login form. Now we'll have a snapshot of the account page after logging in.

```javascript
// loginPageModel is a page model object defined elsewhere in the test suite
test("User can log in to their account", async t => {
  // Fill in and submit the login form
  await t
    .typeText(loginPageModel.emailInput, "[email protected]")
    .typeText(loginPageModel.passwordInput, "airportgap123")
    .click(loginPageModel.submitButton);

  // Capture the account page, then verify that it loaded
  await t
    .takeScreenshot()
    .expect(loginPageModel.accountHeader.exists).ok();
});
```

If you take any screenshots when running a test, TestCafe will display the path of the screenshots in the test results report in your terminal.

By default, TestCafe stores the screenshots in a screenshots sub-directory of the current directory and names the files by timestamp, current browser, and operating system. You can change this location with the --screenshots command line flag, for example, --screenshots path=artifacts/screenshots.

You can also specify the exact name of the screenshot by passing the path option to the action. TestCafe resolves this path relative to the base screenshots directory:

```javascript
test("User can log in to their account", async t => {
  await t
    .typeText(loginPageModel.emailInput, "[email protected]")
    .typeText(loginPageModel.passwordInput, "airportgap123")
    .click(loginPageModel.submitButton);

  await t
    .takeScreenshot({
      path: "images/logged_in_page.png"
    })
    .expect(loginPageModel.accountHeader.exists).ok();
});
```

Also, the takeScreenshot action takes screenshots of the current viewport by default. If the page is longer than the current screen size and you want to take a screenshot of the entire page, you can set the fullPage option to true:

```javascript
test("User can log in to their account", async t => {
  await t
    .typeText(loginPageModel.emailInput, "[email protected]")
    .typeText(loginPageModel.passwordInput, "airportgap123")
    .click(loginPageModel.submitButton);

  await t
    .takeScreenshot({
      fullPage: true
    })
    .expect(loginPageModel.accountHeader.exists).ok();
});
```

Taking a screenshot in a test helps with debugging a specific issue, but you need to add it to your test code manually. A more useful way of taking screenshots with TestCafe is to add the takeOnFails option to the --screenshots command line flag (for example, --screenshots takeOnFails=true), so you'll automatically get a screenshot whenever a test fails. Keep in mind that TestCafe takes the screenshot at the moment of failure, which may not capture the underlying cause of the problem.

Besides static images, TestCafe can also record video of your test runs when you pass the --video command line flag, followed by the directory where the videos should be saved. The only prerequisite is FFmpeg: either install it on your system or add the @ffmpeg-installer/ffmpeg package (node-ffmpeg-installer) to your project, which sets up FFmpeg for you.

With the --video flag, every test in the run is recorded into its own MP4 file. You can change some basic video options with the --video-options flag, such as recording only failed tests or recording the entire test run in a single file. You can also change the video encoding options with the --video-encoding-options flag if you want more control over the video files.
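Like the other command line flags, the video settings can also go into a configuration file. This sketch assumes a TestCafe release that reads .testcaferc.js and that FFmpeg is available; videoPath and videoOptions correspond to the --video and --video-options flags, and the artifacts/videos directory is a hypothetical choice.

```javascript
// .testcaferc.js: video settings, assuming a TestCafe release that reads
// JavaScript configuration files and an FFmpeg installation TestCafe can find.
const config = {
  videoPath: "artifacts/videos", // hypothetical directory for the MP4 files
  videoOptions: {
    failedOnly: true, // keep recordings only for tests that failed
    singleFile: false // one file per test instead of one file for the whole run
  }
};

module.exports = config;
```

Recording only failed tests keeps the output small, which matters when a CI system stores every artifact.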

Taking screenshots and videos makes reporting bugs much easier, since you can attach them to tickets to help the development team tackle the issue. They're also useful when running TestCafe in a continuous integration system. Since CI systems usually run your tests in headless browsers, a screenshot or video is often your only way to figure out why a test failed.

2. Slow down test execution

When TestCafe executes your tests, it runs each step in rapid succession. It goes through each action, and as soon as the action finishes, it immediately moves on to the next thing. Depending on your system, you might not catch what's happening during the test execution because it runs through everything in a flash.

To make it easier to observe what's going on when you run your tests locally, TestCafe lets you adjust the speed of your tests. Using the --speed command line flag, you can tell TestCafe to run at a slower speed.

The --speed flag accepts a number between 1 (the fastest speed, which is the default) and 0.01 (the slowest speed). How fast or slow that feels depends on your system and browser, but in my experience, a speed of 1 means TestCafe runs everything as quickly as possible, while 0.5 runs at about half that speed. You'll need to experiment to see what works well for your system.
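If you switch between full speed and a slower debugging speed often, one option is to compute the speed in a configuration file. The sketch below is hypothetical: it assumes a TestCafe release that reads .testcaferc.js, and TESTCAFE_SPEED is an illustrative environment variable name, not a built-in TestCafe setting. The resolveSpeed helper falls back to the default of 1 and clamps values to the 0.01 to 1 range that the flag accepts.

```javascript
// .testcaferc.js: hypothetical helper that reads the run speed from an
// environment variable. TESTCAFE_SPEED is an illustrative name, not a
// TestCafe setting.
function resolveSpeed(rawValue) {
  const speed = Number.parseFloat(rawValue);

  // Fall back to the default (fastest) speed when the value is unset or invalid.
  if (Number.isNaN(speed)) {
    return 1;
  }

  // Clamp to the accepted range: 0.01 (slowest) to 1 (fastest).
  return Math.min(1, Math.max(0.01, speed));
}

const config = {
  speed: resolveSpeed(process.env.TESTCAFE_SPEED)
};

module.exports = config;
```

With this file in place, a command like TESTCAFE_SPEED=0.1 npx testcafe chrome tests/ would slow down a local run, while runs without the variable stay at full speed.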

Using the command line flag slows down the entire test run. However, sometimes you only want to slow down specific problematic actions instead of the whole test. For instance, you may have a text field bound to a JavaScript function, and all you want is for the test to type into that field slowly for debugging purposes.

TestCafe handles these scenarios by letting you pass an optional speed option to individual actions. Taking the previous code as an example, here's how you can tell TestCafe to type the email address at roughly half the speed:

```javascript
test("User can log in to their account", async t => {
  await t
    // Type the email address at roughly half the default speed
    .typeText(loginPageModel.emailInput, "[email protected]", {
      speed: 0.5
    })
    .typeText(loginPageModel.passwordInput, "airportgap123")
    .click(loginPageModel.submitButton);

  await t.expect(loginPageModel.accountHeader.exists).ok();
});
```

Slowing down your tests is most effective when you're running them on your own machine, where you can watch what's happening. Usually, adjusting the test speed isn't useful in a headless environment like a CI system. However, there are cases where changing the speed helps in those environments too, such as debugging a race condition that makes your tests fail intermittently.

3. Pause your tests and check the browser

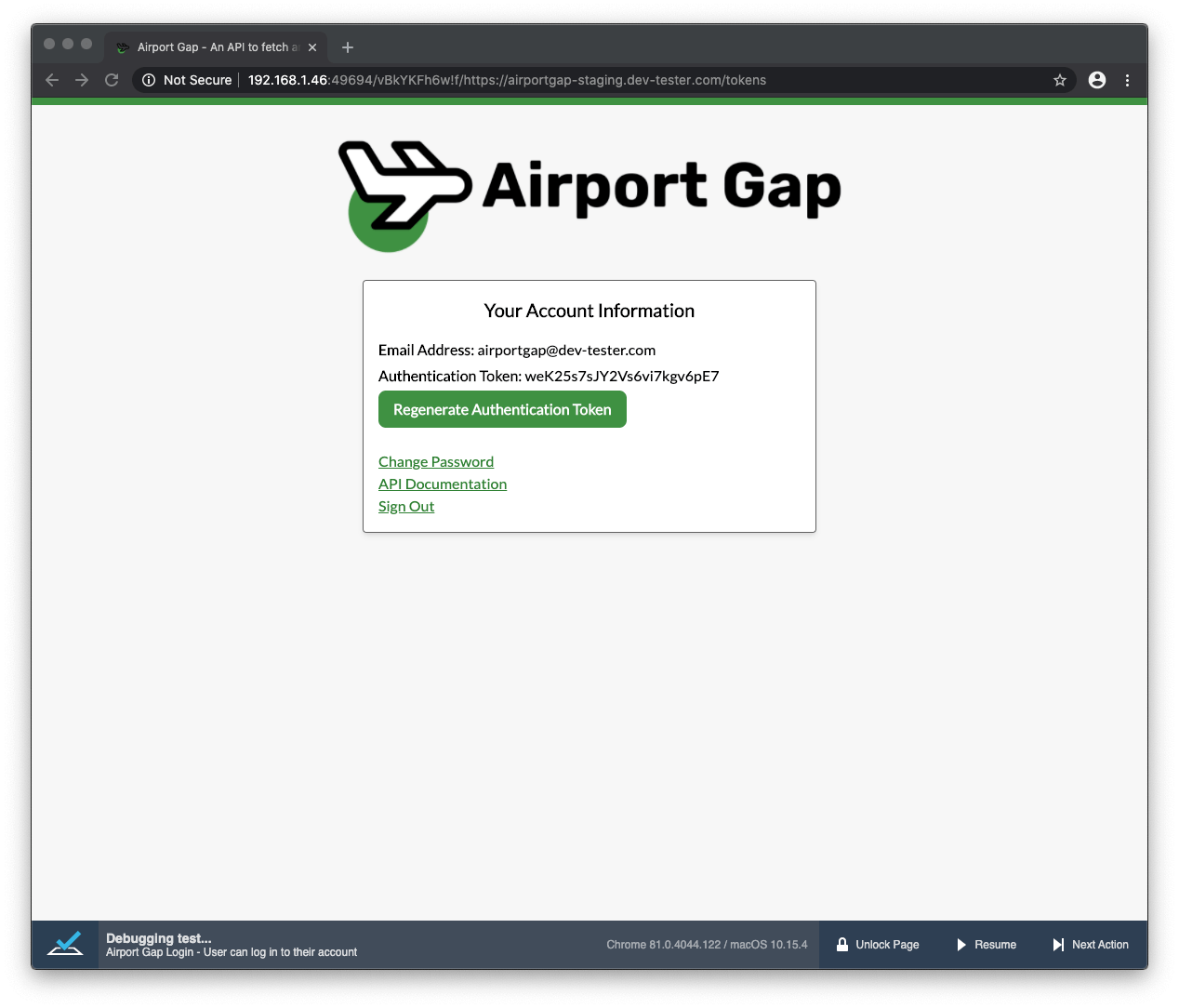

Sometimes you'll run into problems with a test that you can't uncover using screenshots, videos, or a slower execution speed. In these scenarios, TestCafe lets you pause the test at any point so you can inspect and interact with the page in its current state in the browser.

In your code, you can add the debug method to tell TestCafe to halt the test execution in the browser, as in the example below:

```javascript
test("User can log in to their account", async t => {
  await t
    .typeText(loginPageModel.emailInput, "[email protected]")
    .typeText(loginPageModel.passwordInput, "airportgap123")
    .click(loginPageModel.submitButton);

  await t
    // Pause here and open the debugging panel in the browser
    .debug()
    .expect(loginPageModel.accountHeader.exists).ok();
});
```

In this example, the test runs as usual. When it reaches the debug method, the test pauses and displays a few useful buttons in the footer of the page to help you investigate the issue.

When the test enters debug mode, the page is "locked," meaning that you can't interact with any elements on the page. The "Unlock Page" button unlocks the page so you can click around and see what's happening. The "Next Action" button lets you step through the remaining actions in your test one at a time. Finally, the "Resume" button continues the test execution as usual.

Besides using the debug method in your code, you can also pass the --debug-mode command line flag when executing your tests. This flag pauses each test right at the start, before any actions or assertions run. It's useful when you want to halt a test from the beginning and step through each action to see what's happening; combine it with a filter such as --test "User can log in to their account" to pause only the test you're investigating.
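The same debugging setup can be expressed in a configuration file. This sketch assumes a TestCafe release that reads .testcaferc.js; debugMode and filter.test mirror the --debug-mode and --test flags, and the test name is the one from the login example earlier in this article.

```javascript
// .testcaferc.js: pause a single test before its first action, assuming a
// TestCafe release that reads JavaScript configuration files.
const config = {
  debugMode: true, // pause before the first action or assertion of each test
  filter: {
    test: "User can log in to their account" // run only the test under investigation
  }
};

module.exports = config;
```

Because this file pauses every run, it's best kept out of version control or removed once the investigation is over.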

Checking out a problematic test using TestCafe's debug method is helpful because it allows you to dig deep into the state of the page using the browser's developer tools. For example, you can check if any JavaScript errors cause the app's front-end to break, or if a network request returned the data you're expecting.

Summary

No matter how much time you spend writing your end-to-end tests, they will fail at some point for no apparent reason. That's inevitable with these kinds of tests, since you're putting the entire application through its paces to ensure everything is working in harmony. It can get frustrating, especially when these failures seem entirely random and happen at the most inopportune times.

However, you don't have to waste tons of time staring at your test code to figure out what's wrong. Most testing frameworks have excellent support for debugging your tests, and TestCafe is no exception, offering superb debugging options out of the box.

With TestCafe, you can take screenshots and videos of your test runs to see precisely what's happening at any given time, which is particularly handy in continuous integration systems. You can slow down the test execution to observe how TestCafe interacts with your app or smoke out a potential race condition. Also, you can pause tests at specific points and dig deeper into the application using the browser's developer tools.

These debugging methods will get you far in getting to the root of a test's flakiness. However, TestCafe has a few more debugging tricks up its sleeve; look for a future Dev Tester article covering them.

What are your preferred ways to debug flaky tests? Share your tips and tricks in the comments below!
